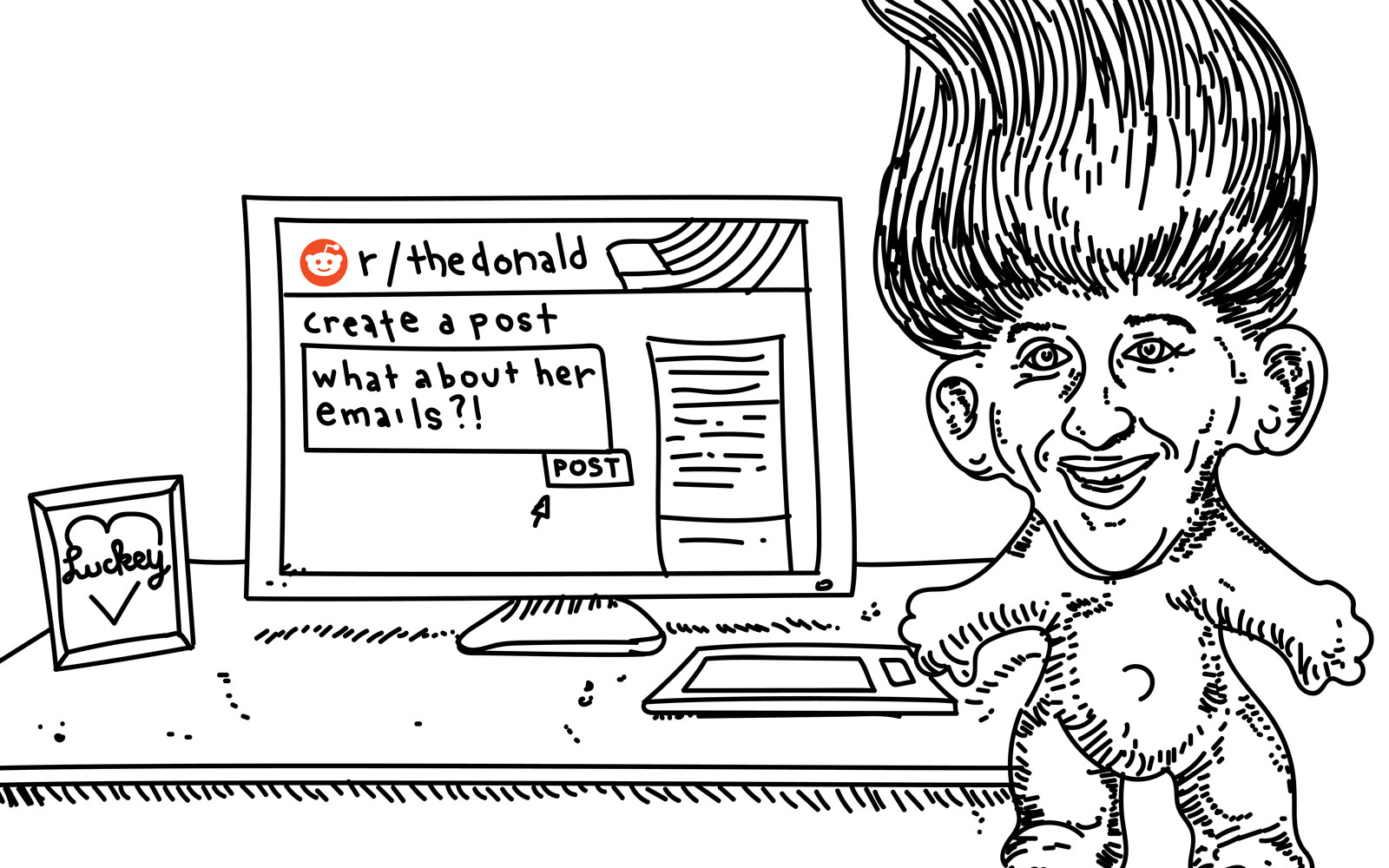

We imagine the scene at Facebook right now is like Kim Jong-il's funeral. Employees weeping in hallways, dripping anguished snot onto keyboards, beating their chests with unsold Facebook phones in an orgy of grief at the injustice of media coverage regarding Mark Zuckerberg's unprompted defense this week of giving Holocaust deniers a voice on the platform. But we think we've finally figured out what's going on at Facebook after all.

You know that guy. The one who pops into a chill online community and makes everyone miserable. The one who says he's "just asking questions" about whether women can do math, black people and evolution, shooting victims and paid actors, the validity of the Holocaust. He's the one mods have to kick out for "JAQing off" ("Just Asking Questions"), because he clearly intends to harm the community and recruit people to hate. The troll who feigns naïveté and uses free speech as a foil.

This week we learned that if you give that guy a platform for his voice, he'll out himself real fast. Right now, headlines blare "Zuckerberg in Holocaust denial row" and "Fortune 500 C.E.O. Says Holocaust Deniers Must Be Given 'a Voice.'"

To be clear, on Tuesday Zuckerberg gave a wandering, kid-glove interview to Kara Swisher of Recode. That was the same day Facebook's representatives went to the mat to avoid telling the House Judiciary Committee exactly how InfoWars gets to stay on Facebook while the company claims to decry hate speech. Zuckerberg told Recode that Facebook won't ban Holocaust deniers or race-war conspiracy propagators like InfoWars just because they're "getting it wrong." Booting them, he said, would also go against his and Facebook's "responsibility" to "give people a voice." Even in his next-day backtracking, Mr. Zuckerberg and his company doubled down on giving that guy a safe space, a voice, and a platform.
As Matt Ford at The Atlantic tweeted, Zuckerberg wasn't even asked in the original interview about his company's policy of fostering Holocaust denial; "he just said he'd keep it on Facebook on his own." Then came the headlines. They were quickly followed by Mark Zuckerberg pulling a Trump and telling his softball interviewer that he had misspoken. "I personally find Holocaust denial deeply offensive, and I absolutely didn't intend to defend the intent of people who deny that," he wrote in a warm personal email to Kara Swisher.

We imagine loyal Facebook employees on the break room floor, tearing up chunks of rubber floor mats and chewing them, swallowing through their own howls and moans, sobbing. "No one understands what Mark really means," they cry. But we all know that one way to double down is to split hairs. It's the hallmark of trolling. It's what that guy is really good at.

Nowhere is this clearer than in "Inside Facebook: Secrets of the Social Network," this week's episode of the UK's Channel Four series Dispatches. The episode aired Tuesday and received little coverage in the US. Before you watch it, be warned: it covers a video of a toddler being violently beaten, a video that has been kept on Facebook since 2012. "The video is used during the undercover reporter's training period as an example of what would be left up on the site, and marked as disturbing, unless posted with a celebratory caption," Channel Four wrote in a news release about its investigation. "The video is still up on the site, without a graphic warning, nearly six years later." In response to the show, "Facebook told Dispatches they do escalate these issues and contact law enforcement, and the video should have been removed." Still, Dispatches discovered that "Pages belonging to far-right groups, with large numbers of followers, [are] allowed to exceed deletion threshold, and subject to different treatment in the same category as pages belonging to governments and news organisations."
Meanwhile, it also found "Policies allowing hate speech towards ethnic and religious immigrants, and trainers instructing moderators to ignore racist content in accordance with Facebook's policies."

The undercover investigation found something that might explain why Facebook is fighting for the rights of InfoWars and Holocaust deniers. A moderator told the undercover reporter that the far-right group Britain First's pages were left up despite repeatedly featuring content that breached Facebook's guidelines because "they have a lot of followers so they're generating a lot of revenue for Facebook." The Britain First Facebook page was finally deleted in March 2018 following the arrest of deputy leader Jayda Fransen. Facebook confirmed to Dispatches that they do have special procedures for popular and high-profile pages, which include [jailed former English Defence League leader] Tommy Robinson and included Britain First.

So Facebook has rules; they're just different for popular extremists. Unless it's sex, of course, which is the only thing Facebook will categorically censor, shut down, forbid, and eject as a bad thing. As seen in this week's Motherboard scoop "Leaked Documents Show Facebook's 'Threshold' for Deleting Pages and Groups," human sexuality is another topic governed by different rules than anything else. The company's motto might as well be "Make war, not love."

Facebook has played fast and loose with "free speech," using it as a moving goalpost that lets the company keep hate groups as active users on its site. This week brought the revelation that Trump PAC ads ranked number one on Facebook in both spending ($274,000) and reach (37 million people). Given that, perhaps that crowd makes up the majority of Facebook's users. Reddit has played the same game to extremes, creating a safe space, a platform, and a voice for people who use "free speech" or "just ask questions" in demonstrably bad faith. Yet here is where we've found the line.
This is a real, definable instance in which a company is explicitly defending its intent to give hate groups and extremists a platform for recruiting and spreading their messages. Unlike Facebook, some moderators on Reddit have figured out how to spot people who want to harm the community and recruit others to extremist ideologies. These are bigots who make spaces so toxic that people leave. The mods at r/AskHistorians, an actual, non-toxic place on Reddit, have active measures in place to stop hate and radicalization. They've been dealing with Holocaust deniers for a long time. Their measures, which work, amount to a zero-tolerance policy. That is the literal opposite of what Zuckerberg said to Recode and in his follow-up backpedaling, and the opposite of what Facebook is doubling down on as a policy.

There's a difference between raising awareness about hate and actively spreading it. Both Facebook and Reddit utterly fail to comprehend that difference in their reductive free-market-of-ideas approach to censorship. Facebook is a company that advertises itself as best-in-class for reaching people and selling them stuff. On a platform built for that kind of reach, giving hate groups a "voice" legitimizes their rhetoric and allows them to flourish.

In case you have any doubts left, Facebook and Reddit have both made, coddled, defended, and cushioned every single "that guy" who has turned what was once a fun internet into a really shitty place no one wants to be anymore. And there's a horrifying amount of hard evidence that this has direct real-life consequences. One study found a direct link between hate on Facebook and subsequent violent attacks on immigrant groups in both Germany and the US. That guy usually hides behind sock puppets as he JAQs off into everyone's faces and turns hate into action. In the case of Facebook's CEO, though, we now know the identity of at least one of those guys.

via Engadget RSS Feed https://ift.tt/2uRJgR7
